Using rubrics to plan and assess complex tasks and behaviors

A rubric is an easily applied form of assessment. Rubrics are most commonly used in education, where they offer a process for defining and describing the important components of the work being assessed. They can help us plan and assess complex tasks (e.g. essays or projects) or behaviors (e.g. collaboration, teamwork). Increasingly, rubrics are also being used to help develop assessments in other areas such as community development and natural resource management.

In a recent report we describe how rubrics can be used to assess complex tasks and behaviours such as engagement and partnerships. Rubrics are both an instructional tool and a performance assessment tool. They act as a guide to help practitioners clarify and understand both the objectives required to complete any particular initiative and the qualities required to achieve high standards in those objectives.

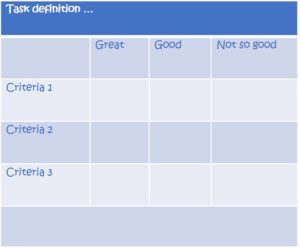

Although rubric formats vary, all rubrics have two key components:

- A list of criteria – or what counts in an activity or task

- Gradations of quality – to provide an evaluative range or scale.

Developing rubrics helps clarify the expectations that people have for different aspects of task or behavior performance by providing detailed descriptions of collectively agreed-upon expectations. Well-designed rubrics increase the reliability and validity of assessment, and help ensure that the information gathered can be used to help people assess their management efforts and improve them. A rubric differs from a simple checklist because it also describes the gradations of quality (levels) for each dimension of the performance being evaluated. It is important to involve program participants in developing rubrics, and in helping to define and agree on the criteria and assessment. This broad involvement increases the likelihood that different evaluation efforts will provide comparable ratings. As a result, assessments based on these rubrics will be more effective and efficient.

Developing rubrics together with participants involves a number of steps:

- Defining the task to be rated. This can include consideration of both outputs (things completed) and processes (level of participation, required behaviors, etc.).

- Defining criteria to be assessed. These should represent the component elements that are required for successful achievement of the task to be rated. The different parts of the task need to be set out simply and completely. This can often be started by asking participants to brainstorm what they might expect to see where/when the task is done very well … and very poorly.

- Developing scales which describe how well any given task or process has been performed. This usually involves selecting 3-5 levels. Scales can use different language such as:

  - Advanced, intermediate, fair, poor

  - Exemplary, proficient, marginal, unacceptable

  - Well-developed, developing, under-developed

Co-developing rubrics in this way helps groups and teams clarify and negotiate the expectations that members may have for different aspects of project performance. It is important to involve key stakeholders in developing the final versions of any rubrics that will be used to assess their activities. By working together, members can develop shared descriptions of collectively agreed-upon measures for key areas of performance. When stakeholders help define and agree on the criteria and assessment scales, as something they feel is achievable and within the limits of normal operations, the assessment is more likely to be used and acted upon by those involved.

Equally, rubric-based assessment is not just a tick-box exercise. Assessments should be evidence-based and form part of an ongoing process of learning and adaptive management that continues throughout the life of the initiative. Facilitated learning debriefs and After Action Reviews provide a reflective process that can underpin this adaptive management approach. In this way, the rubric development and assessment process itself can help adapt key project tasks and behaviours so that performance grows and strengthens over time.

More resources on rubrics can be found on the main Learning for Sustainability rubrics page – Rubrics as a learning and assessment tool for project planning and evaluation. More material can also be found on the indicators page, and other related PM&E resources can be found on the Theory of Change and Logic Modelling pages.

More information on my own use of rubrics can be found in recent research papers. One 2016 paper looks at the use of rubrics to support collaboration in an integrated research programme: Bridging disciplines, knowledge systems and cultures in pest management. A more recent book chapter (2018) looks at the Use of Rubrics to Improve Integration and Engagement Between Biosecurity Agencies and Their Key Partners and Stakeholders: A Surveillance Example.

[Note: This post is an update of an earlier 2016 version with the original title – Using rubrics to assess complex tasks and behaviors.]

An independent systems scientist, action research practitioner and evaluator, with 30 years of experience in sustainable development and natural resource management. He is particularly interested in the development of planning, monitoring and evaluation tools that are outcome focused, and contribute towards efforts that foster social learning, sustainable development and adaptive management.
