Managing for outcomes: using logic modeling

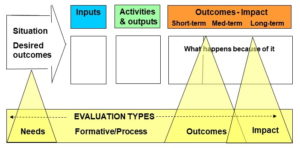

Logic models are narrative or graphical depictions of real-life processes that communicate the underlying assumptions upon which an activity is expected to lead to a specific result. They generally illustrate a sequence of cause-and-effect relationships, i.e. they take a systems approach to communicating the path toward a desired result. The model describes logical linkages among programme resources, activities, outputs, and audiences, and highlights different orders of outcomes related to a specific problem or situation. Importantly, once a programme has been described in terms of the logic model, critical measures of performance can be identified. In this way logic models support both planning and evaluation. A good place to start is with these site blog introductions to developing a theory of change (ToC), using logic models, and working with outcomes. Below are annotated links to a number of intervention logic resources:

Impact evaluation of natural resource management research programs: a broader view

This report by John Mayne and Elliot Stern outlines impact evaluation strategies that accept that natural resource management research (NRMR) is likely to be a ‘contributory cause’ rather than the sole cause of program results. It builds on recent reports that demonstrate that, in many development settings, impact evaluation should be seen as contributing to an adaptive learning process that supports the successful implementation of innovative programs. Change is nearly always the result of a “causal package”, and for an NRMR intervention to make a contribution it must be a necessary part of that package. The report highlights the importance of “intermediate outcomes” and “theories of change”.

Setting outcomes, and measuring and reporting performance of regional council pest and weed management programmes

This report by Chris Jones, Phil Cowan & Will Allen was developed through a project to provide local government with the capability to set measurable outcomes for biosecurity programs and to identify the best indicators with which to measure progress towards those outcomes. It aims to help them better structure, measure and report on the performance of biosecurity programs. The principles and protocols described are applicable to most other areas of local government and agency activity. More resources related to this work can be accessed from the Landcare Research site.

Developing and using program logic in natural resource management

This guide by Alice Roughley outlines a step-by-step process for developing program logic in the context of Natural Resource Management (NRM). The guide is aimed at those who are developing a program logic for the first time and may also be helpful to other users in a range of contexts. In this guide, the term “program” covers all levels of intervention, whether through a project, program, strategy or activity, as well as program design and evaluation. The guide works through a series of exercises, templates and checklists. The key steps it identifies are: scoping and defining the program boundaries; developing an outcomes hierarchy and expectations about change; articulating and documenting assumptions and theory of change; and formulating evaluation questions, program contribution and audiences.

Handbook on planning, monitoring and evaluating for development results

This 2009 version of the UNDP Handbook on Planning, Monitoring and Evaluating for Development Results recognizes that planning, monitoring and evaluation require a focus on nationally owned development priorities and results. First, it recognizes that results planning is a prerequisite for effective programme design, monitoring and evaluation, and so integrates planning, monitoring and evaluation in a single guide. Second, it reflects the requirements and guiding principles of a cycle of planning, monitoring and evaluation. Third, it includes a comprehensive chapter on evaluation design for quality assurance.

Logic models

This website from the University of Wisconsin has an example of a logic model, as well as tools for creating a logic model in PDF, Word and Excel formats. These tools, which come with instructions, can be downloaded and used for your organization.

Introducing program teams to logic models: Facilitating the learning process

A good introduction to developing a logic model from Nancy Porteous and colleagues. This Research and Practice Note provides the key content, step-by-step facilitation tips, and case study exercises for a half-day logic model workshop for managers, staff, and volunteers. Included are definitions, explanations, and examples of the logic model and its elements, and an articulation of the benefits of the logic model for various planning and evaluation purposes for different audiences.

Enhancing Program Performance with Logic Models

This course from the University of Wisconsin extension service introduces a holistic approach to planning and evaluating education and outreach programs. Module 1 helps program practitioners use and apply logic models. Module 2 applies logic modeling to a national effort to evaluate community nutrition education.

A Guide to Developing Public Health Programmes: A generic programme logic model. Occasional Bulletin No. 35

This 2006 guide by John Wren aims to help people design and implement comprehensive, effective and measurable public health programmes that will deliver improved public health outcomes. The guide describes a generic programme logic model and checklist that are designed to guide people through the steps of developing a thorough public health programme.

CES Planning Triangle

The CES Planning Triangle highlights impact, outcomes and outputs. In this way it seems similar to a logic model and can be a useful tool to help you picture what you do and why. The CES Planning Triangle does this by helping an organisation or project to organise its aims and objectives. It helps you to see how what your organisation does (your objectives) can lead to changes (your aims) by showing how they relate to the impact, outcomes and outputs of your work.

Outcomes mapping and harvesting

Outcome Mapping focuses on one particular category of results – changes in the behaviour of people, groups, and organizations with whom a program works directly. These changes are called ‘outcomes’. Through Outcome Mapping, development programs can claim contributions to the achievement of outcomes rather than claiming the achievement of development impacts. Although these outcomes, in turn, enhance the possibility of development impacts, the relationship is not necessarily one of direct cause and effect. Instead of attempting to measure the impact of the program’s partners on development, Outcome Mapping concentrates on monitoring and evaluating its results in terms of the influence of the program on the roles these partners play in development.

Outcome Mapping: Building learning and reflection into development programs

This manual by Sarah Earl, Fred Carden, and Terry Smutylo is intended as an introduction to the theory and concepts of Outcome Mapping. Section 1 presents the theory underpinning Outcome Mapping – its purpose and uses, as well as how it differs from other approaches to monitoring and evaluation in the development field, such as logic models. Section 2 presents an overview of the workshop approach to Outcome Mapping – including the steps of the workshop, as well as how to select participants and facilitators. Sections 3, 4, and 5 outline each of the stages of an Outcome Mapping workshop, suggest a process that can be followed by the facilitator and provide examples of the finished ‘products’.

Outcome Harvesting

Outcome Harvesting is a utilization-focused, highly participatory tool that enables evaluators, grant makers, and managers to identify, formulate, verify, and make sense of outcomes they have influenced when relationships of cause-effect are unknown. This is the original Ford Foundation report by Ricardo Wilson-Grau and Heather Britt. Unlike some evaluation methods, Outcome Harvesting does not measure progress towards predetermined outcomes or objectives, but rather collects evidence of what has been achieved, and works backward to determine whether and how the project or intervention contributed to the change. This brief is intended to introduce the concepts and approach used in Outcome Harvesting to grant makers, managers, and evaluators, with the hope that it may inspire them to learn more about the method and apply it to appropriate contexts.

Retrospective ‘Outcome Harvesting’: Generating robust insights about a global voluntary environmental network

This 2013 article is written by Kornelia Rassmann, Richard Smith, John Mauremootoo and Ricardo Wilson-Grau. It discusses three aspects of evaluation practice: i) how Outcome Harvesting, complemented by interviews, was employed as a practical way to identify and evaluate the (sometimes unanticipated) outcomes of an extensive network; ii) what was undertaken to ensure that the validity and credibility of the outcomes met the needs of the primary intended users of the evaluation; and iii) how the challenges of using a time-intensive methodology in a time-deficient situation were addressed.

Often people talk about logic models and theory of change processes interchangeably. Logic models – such as the ones above – connect programmatic activities to client or stakeholder outcomes. But a theory of change goes further, specifying how to create a range of conditions that help programs deliver on the desired outcomes. These can include setting out the right kinds of partnerships, types of forums, particular kinds of technical assistance, and tools and processes that help people operate more collaboratively and be more results-focused. You may also be interested in the related topic of indicator development. Another related page can be found in the knowledge management section with links on how best to develop conceptual models.